How to Spot AI Hallucinations

Ever heard of an AI hallucination? No, robots aren't daydreaming about pizza or unicorns (though that would be cool!). An AI hallucination happens when an AI makes stuff up—like a super-confident friend who insists penguins live in Texas. Spoiler alert: they don't!

AI tools, like ChatGPT, can sometimes give you answers that sound perfect but are totally wrong. They're not lying on purpose. They just can't always tell a real fact from a good-sounding guess.

Why Does This Happen?

AI learns from tons of information. When you ask it something, it builds its answer by guessing the most likely next word, over and over. If it doesn't have the right facts, it keeps guessing anyway, so the answer can sound great even when it's totally made up. Imagine someone asks you about a topic you know nothing about, so you just say something that sounds right. That's what AI does too!
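Want to see the "guess the next word" idea in action? Here's a tiny Python sketch. It is a toy, not how ChatGPT really works inside: the three training sentences and the function names are made up for this example. The little program only remembers which word came after which, and that's enough for it to say something wrong with total confidence.

```python
import random
from collections import defaultdict

# Tiny made-up "training data" (an assumption, for illustration only).
TRAINING_TEXT = (
    "penguins live in antarctica . "
    "cowboys live in texas . "
    "penguins eat fish . "
)

def build_next_word_table(text):
    """Map each word to the list of words that followed it in the text."""
    words = text.split()
    table = defaultdict(list)
    for current, following in zip(words, words[1:]):
        table[current].append(following)
    return table

def guess_sentence(table, first_word, rng, max_words=10):
    """Build a sentence by repeatedly picking a word that has followed the last one."""
    sentence = [first_word]
    while len(sentence) < max_words:
        options = table.get(sentence[-1])
        if not options:
            break
        next_word = rng.choice(options)
        if next_word == ".":  # a period ends the sentence
            break
        sentence.append(next_word)
    return " ".join(sentence)

table = build_next_word_table(TRAINING_TEXT)
rng = random.Random()
for _ in range(5):
    print(guess_sentence(table, "penguins", rng))
```

Run it a few times and you'll sometimes get "penguins live in texas". Nobody ever told the program that. It just noticed that "texas" can come after "live in", and it never checks whether the whole sentence is true.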

How to Catch an AI in the Act

- Check with a Real Source: If the AI says cats run the government, maybe Google that.

- Watch for Weirdly Specific Info: AI might confidently tell you Albert Einstein invented tacos in 1912. Interesting… but nope! Oddly exact names and dates deserve a second look.

- Crazy Contradictions: If one minute the AI says the moon is made of cheese and the next it says the moon is rock, you've caught it hallucinating!

- Trust Your Gut: If something sounds fishy (like an AI friend telling you elephants fly at night), question it.
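The contradiction check is easy to try yourself: ask the AI the same question a few times and compare what comes back. Here's a minimal Python sketch of that idea. The answers in the list are made up, and `normalize` and `check_consistency` are just names invented for this example. You would paste in the real answers you got.

```python
from collections import Counter

def normalize(answer):
    """Lowercase and drop punctuation so tiny wording differences don't count."""
    return "".join(ch for ch in answer.lower() if ch.isalnum() or ch == " ").strip()

def check_consistency(answers):
    """Return the most common answer and the share of answers that match it."""
    counts = Counter(normalize(a) for a in answers)
    top_answer, top_count = counts.most_common(1)[0]
    return top_answer, top_count / len(answers)

# Hypothetical answers copied down after asking the same question three times.
answers = [
    "The moon is made of rock.",
    "The moon is made of cheese!",
    "the moon is made of rock",
]

top, agreement = check_consistency(answers)
print(top, round(agreement, 2))
if agreement < 1.0:
    print("The answers disagree. Time to check a real source!")
```

One warning: matching answers don't prove the AI is right. It can repeat the same wrong thing every time, so a real source still wins.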

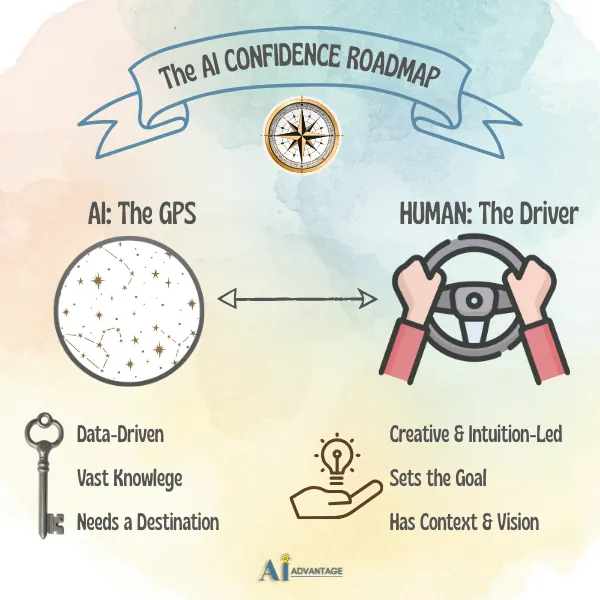

Remember the Golden Rule

AI is awesome but not perfect. It's like your quirky friend who sometimes tells wild stories—fun, helpful, but occasionally totally off. Always double-check anything super important.

And now, if you'll excuse me, my AI just said dolphins are joining the president's cabinet. I'd better look that up!
