"Pay No Attention to the Fake News Behind the Curtain"

Welcome to the AI ADVANTAGE blog! It's Carol here, and I'm so glad you're joining me.

I get lots of questions about AI from people in my workshops and in everyday conversations. Whether you're "AI Curious" or "AI Competent," I know there will be something here for you.

We'll start this series with a bit of education about "fake news" and "fake images" and how to identify them. As always, I love to hear from you. Tell me what YOU think about this topic!

We're all concerned about being "duped" by fake news and images. It doesn't take much to be taken in by something that sounds legit but turns out to be a deepfake. And in some cases, it can actually be dangerous.

So here are just a few things you and I can look for to avoid being taken in:

How to Spot Fake News

Emotional Overload Warning:

- Does the headline scream at your emotions (anger, fear, or outrage)? Fake news often counts on emotional responses to bypass rational thought.

Double-Check the Source:

- If you've never heard of the website, do a quick search to see how trusted it is. Tools like Snopes and FactCheck.org can make fact-checking quick and painless. (Links below)

Look for Typos and Odd Formatting:

- Professional sources care about quality. Fake news? Not so much. Check the web address, too. Something like Amazon.com.co is easy to skim right over, but the extra ".co" on the end means it's not the real Amazon address.
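
For readers who like to tinker, the web address check above can be sketched in a few lines of Python. This is only a minimal illustration, not a complete safety tool: the function name `is_official_domain` is my own invention, and it assumes you already know the site's real domain and that the link includes "https://" at the front.

```python
from urllib.parse import urlparse


def is_official_domain(url: str, official_domain: str) -> bool:
    """Return True if the link's host is the official domain or a subdomain of it.

    Hypothetical helper for illustration only. It assumes the URL includes
    a scheme such as "https://"; without one, no host is found and the
    function returns False.
    """
    # Pull out just the host part of the link, e.g. "www.amazon.com"
    host = (urlparse(url).hostname or "").lower().rstrip(".")
    official = official_domain.lower()
    # Accept an exact match, or a host that ends with ".<official domain>"
    return host == official or host.endswith("." + official)


# The real address passes; the lookalike with an extra ".co" does not
print(is_official_domain("https://www.amazon.com/deals", "amazon.com"))
print(is_official_domain("https://amazon.com.co/deals", "amazon.com"))
```

The key idea is to compare the end of the host name, not just look for the familiar brand somewhere inside it. A lookalike address can contain "amazon.com" and still belong to someone else.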

What About Fake Images?

Reverse Image Search:

- Upload the suspicious photo to Google Images or TinEye to see where else it has appeared online. That can quickly show whether it's been doctored or lifted from somewhere else.

Zoom in and Look Carefully:

- Fake images often reveal themselves in weird shadows, distorted proportions, blurry edges, or odd reflections. If something seems off, it probably is.

Context Clues:

- Does the image match the caption or description logically? A snowstorm in July or giraffes strolling through downtown Paris? It's probably fake (and a little amusing).

Still Unsure? Here Are Trustworthy Resources to Bookmark:

Remember, being skeptical is healthy, but it doesn't mean everything online is fake. These tools and techniques will help you navigate the digital landscape with confidence rather than fear. The most powerful tool? Your own critical thinking combined with these verification methods.

Stay curious, stay informed, and when in doubt, check it out!

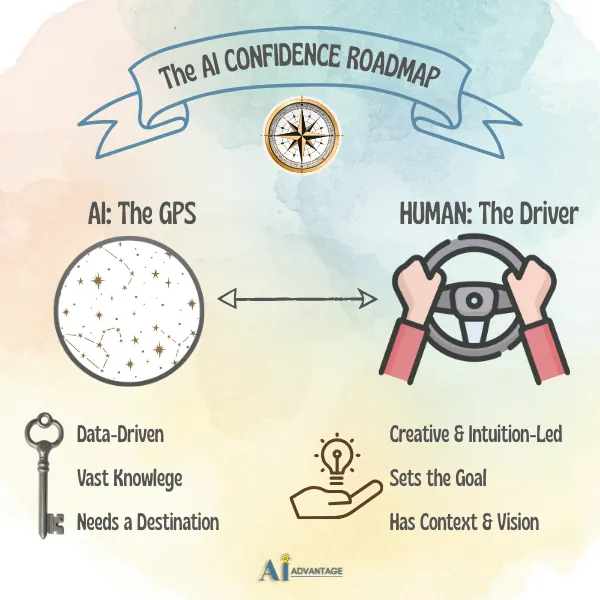

AI ADVANTAGE can help you move from curious to competent with one of our AI training workshops.

Making AI accessible, understandable and useful.
